Products And Processes: How Innovation and Product Life-Cycles Can Help Predict The Future Of The Electronic Scholarly Journal

PDF Version available on conference web site

Andrew Treloar

Senior Lecturer in Information Management

School of Computing and Mathematics, Deakin University

Andrew.Treloar@deakin.edu.au

- http://www.deakin.edu.au/~aet/

Keywords

Innovation, life-cycles, electronic journals, processes, products

Introduction

We live in a time of change in the scholarly journal industry. This change predates the arrival of electronic publishing, but the increase in new technologies has accelerated many of its aspects. Indeed, many of the latest advances in e-journals have only been made possible by the development and adoption of new technologies such as multimedia personal computers, the Internet and World Wide Web, Adobe’s Portable Document Format (PDF) and so on. Such new technologies follow broadly predictable life-cycles which influence their adoption rates. What does the literature of technology life-cycles have to say about e-journals and how can it help us plot their future? This paper starts by considering technology life-cycles and adoption rates in general. Next it considers product and process innovation. It then looks at these life-cycles in the personal computer industry, before concluding by considering the implications of these concepts for e-journals in the near to mid-term.

Technology life-cycles and adoption rates

We live in an era of ever-increasing change. One of the most obvious indicators of this change is the bewildering diversity of products available to purchase and use, and the rate at which these products become technologically obsolete. The field of product development and product life-cycles has been intensively studied during the last half of this century, and the last decade has seen a particular focus on high-technology products: computers, consumer electronics and the like. A number of these researchers have sought to identify patterns in the life-cycle of typical products.

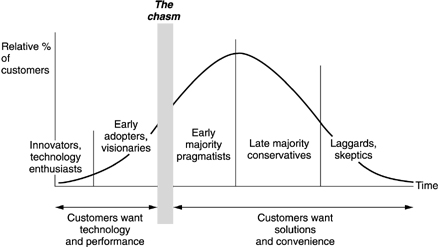

The standard text in this field is by Everett Rogers, now in its fourth edition [Rogers, 1995]. Rogers classifies stages in the technology life-cycle by the relative percentage of customers who adopt it at each stage. Early on are the innovators and early adopters (who are primarily concerned with the underlying technology and its performance). Then come in succession the early majority pragmatists, the late majority conservatives and lastly the laggards (all of whom are much more interested in solutions and convenience).
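As an illustration of how these percentages arise, the sketch below assumes Rogers' conventional scheme, in which the categories are bounded by standard deviations from the mean time of adoption on a normal curve. The cut-points and function name are illustrative assumptions and are not taken from this paper's figures.

```python
from statistics import NormalDist

# Assumed cut-points (in standard deviations from the mean adoption time),
# following Rogers' conventional categorisation.
CATEGORIES = ["innovators", "early adopters", "early majority",
              "late majority", "laggards"]
CUT_POINTS = [-2.0, -1.0, 0.0, 1.0]


def adopter_shares():
    """Return the share of eventual adopters falling in each category."""
    nd = NormalDist()  # standard normal: mean 0, standard deviation 1
    cumulative = [0.0] + [nd.cdf(z) for z in CUT_POINTS] + [1.0]
    return {
        name: cumulative[i + 1] - cumulative[i]
        for i, name in enumerate(CATEGORIES)
    }


if __name__ == "__main__":
    for name, share in adopter_shares().items():
        print(f"{name:15s} {share:6.1%}")
```

Under these assumptions the innovators and early adopters together make up roughly one sixth of eventual adopters, which is why the step to the early majority matters so much commercially.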

Moore [Moore, 1991], drawing on Rogers’ work, depicts the transition between the early adopters and the early majority pragmatists as a chasm that many high-technology companies never successfully cross (see figure 1).

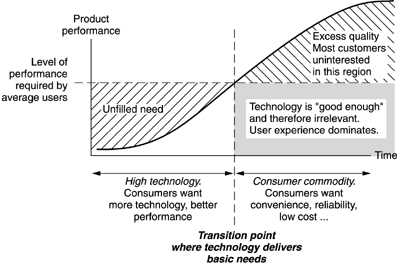

Christensen focuses instead on why new technologies often cause problems for existing industries. He argues that innovative technologies are often underrated or viewed as inferior by the dominant players until it is too late: the new technologies have achieved a momentum towards adoption and the environment for the existing industries is changing around them and out of their control. Christensen prefers to look at the phenomenon of technology take-up from the perspective of the level of performance required by average users (those in the early and late majority categories in figure 1) [Christensen, 1997]. He argues that once a technology product meets customers’ basic needs they regard it as ‘good enough’ and no longer care about the underlying technology (see figure 2). This idea of technologies as ‘good enough’ is a very powerful one, as we will see later.

Figure 1: Change in customers as technology matures.

Source: Norman 1998. Used with permission.

Figure 2: Moving from high technology to consumer commodity.

Source: Norman 1998. Used with permission.

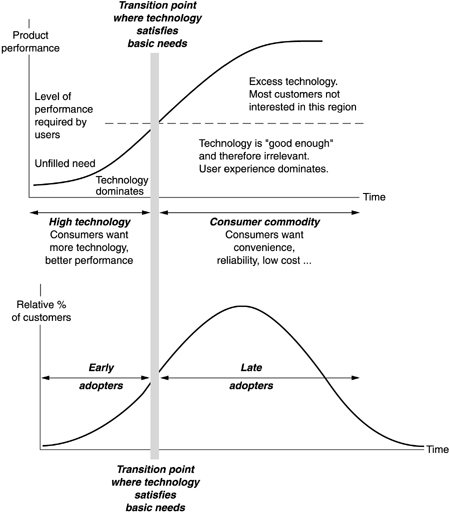

Norman argues persuasively that the two curves in figures 1 and 2 are in fact telling the same story [Norman, 1998]. It is only when the technology becomes good enough that the chasm between early adopters and late adopters is crossed (see figure 3). It is the very process of innovation that provides this improvement in technology and drives the shift from high technology product to consumer commodity.

Figure 3: The transition from technology-driven to customer-driven products.

Source: Norman 1998. Used with permission.

Products and innovation

So how do innovative products come into being, and why are they adopted? The answer depends on the type of product and the type of innovation.

Product types

Classical economics distinguishes between two types of goods: substitutable and non-substitutable.

A good is substitutable if an alternative can be found by the user that meets their needs equally well and if the cost to switch is low or zero. Most traditional products fall into this category: cars, food, clothing, consumer electronics. Note that much advertising is devoted both to suggesting that such goods are not substitutable (Coke is not the same as Pepsi) and yet paradoxically urging users to substitute their consumption and switch their allegiance. With such substitutable products, a company does not need a dominant market share. Indeed, there may well be a large diversity of such products in a given market segment.

In contrast, a non-substitutable good cannot easily be switched for another. Donald Norman [Norman, 1998] points out that infrastructure products (like network switches or operating systems) are inherently non-substitutable. An infrastructure product provides a platform on which other products can be layered. To switch from one to another carries a large cost and consumers are therefore reluctant to switch unless they have to. Infrastructure goods also benefit from what economists call network externalities [Agre, 1995]. They naturally tend towards the dominance of one standard, because the benefits to the adopters of that standard increase with its share of the market. Examples of contests between such non-substitutable goods include OSI vs. TCP/IP, the MacOS vs. Windows, and Beta vs. VHS. Non-substitutable goods are more resistant to product life-cycles because adopting them involves a large cost. This does not mean that users of such goods will never shift; they will, but only if the advantages of doing so are strong enough.

Innovation

The process of moving from left to right on the graph shown in figure 3 requires innovation. This innovation is typically taken to refer to product innovation.

Product innovation involves coming up with something new (or sufficiently different). Successful startup companies begin with product innovation; they either come up with a significantly better product or a new product altogether (like the first Web browser). Small nimble companies find it much easier to do this in part because they are willing to take risks (and because they have less to lose). Organisations at this stage are often characterised by what has been called the ‘handcraft syndrome’: everything is done by hand with few systems in place to automate things (in part because everything is new and being developed ‘on the fly’).

Rogers [Rogers, 1995] points out that sometimes it is necessary for multiple innovations to be adopted at the same time. As an example, it is not possible to introduce cars that use hydrogen as a fuel without providing a way to refuel such cars and an infrastructure to support such refuelling. Hahn and Schoch [Hahn & Schoch, 1997] call this an innovation cluster: adopters of an innovation do not have to adopt all members of the cluster, but if they adopt one it is more likely that they will adopt others.

Utterback argues that innovation with respect to processes is at least as important as product innovation [Utterback, 1994]. Process innovation involves changing the way the company does things to do them better (but without necessarily changing the product). Once a product is established the company needs to transition to process innovation to bring the price down and/or raise the quality. Making this transition from product to process innovation is critical to moving from a high technology product to a consumer commodity product. This has sometimes been characterised as moving from handcraft to the Industrial Revolution: automation is used more and more to ensure consistency and to reduce costs. At about the same time that companies move into process innovation, their products usually move past the transition point where the underlying technology satisfies the users’ basic needs. It has become ‘good enough’ and the late adopters (who only care about convenience, reliability and low cost) embrace it.

Personal computer technology life-cycles

The personal computer and its associated technologies are an example of an industry sector which follows these life-cycles. In fact, the personal computer can be considered as a technology stack that starts at the lowest level with the hardware layer, then moves to the operating system layer, then adds the network layer and finally the application layer. Each of these levels may be at different stages in the technology life cycle. What is the current status of each of these levels (with a particular emphasis on technologies most relevant to electronic publishing)?

Hardware

The majority of personal computers today (with the exception of high-end specialist machines and notebooks) use commodity hardware for their motherboards, processors, secondary storage, memory and displays. Every segment of the market contains multiple suppliers who are all actively innovating and competing on cost, convenience and reliability. Moore’s law ensures that performance/dollar continues to double every 12-18 months (depending on the type of hardware). This performance curve is likely to operate into the near future (the next five years at least). This means that each year either the performance available for a particular amount increases or the cost to buy a fixed amount of performance decreases. Unfortunately, a variety of factors mean that users’ expectations rise at about the same rate as available performance, meaning that the computer one wants always costs about the same amount each year (although it keeps improving in performance).
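The compounding effect of a 12-18 month doubling period over the five-year horizon mentioned above can be made concrete with a short calculation. This is only a sketch: the function names and the starting price of 2000 are illustrative assumptions, not figures from this paper.

```python
def performance_multiple(years, doubling_months):
    """Factor by which performance per dollar grows over `years`,
    assuming it doubles every `doubling_months` months."""
    return 2 ** (years * 12 / doubling_months)


def cost_for_fixed_performance(initial_cost, years, doubling_months):
    """Cost of buying today's level of performance `years` from now."""
    return initial_cost / performance_multiple(years, doubling_months)


if __name__ == "__main__":
    for months in (12, 18):
        multiple = performance_multiple(5, months)
        # 2000 is a hypothetical purchase price in arbitrary currency units.
        cost = cost_for_fixed_performance(2000, 5, months)
        print(f"doubling every {months} months: "
              f"x{multiple:.1f} performance per dollar after 5 years, "
              f"fixed performance costs {cost:.0f}")
```

Even at the slower 18-month rate, performance per dollar grows roughly tenfold in five years. At the 12-month rate it grows thirty-two-fold.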

The performance of the various hardware segments (CPU, memory, secondary storage, display) passed the ‘good enough’ point some years ago, and most consumers now don’t particularly care about the technical details of their system. They just want something that works. Computer games players are an exception here. Computer games are at the leading edge in the demands they place on computer hardware (particularly the graphics sub-system) and the players (and producers) of such games are continually demanding increasing graphics performance. The shift towards commodity hardware is sufficiently far advanced that a number of companies in the U.S. have announced plans to provide a free computer provided that consumers sign up to use a particular Internet Service Provider (ISP) [Sullivan, 1999] or commit to divulging personal information and using a browser with embedded advertising [MSNBC, 1999].

Peripherals

The two critical peripheral categories for electronic publishing are screens (for display) and printers (for output).

The three dimensions of displays that matter are the physical size, the display resolution and colour depth. Of these, display resolution has been lagging other developments to a significant extent. A decade ago, 72 dots per inch (dpi) was the standard resolution. Today 96 or 100 dpi is routinely available. This is a very small improvement, and means that the information density of a typical screen is still much lower than print. This slows reading and reduces the quality of the online reading experience. Physical size has grown (and become cheaper) so that 15 inch or 17 inch CRT displays are now quite affordable, providing a much-needed increase in screen real estate. Colour depth has also now increased so that 24-bit colour is standard on new machines. Displays are now ‘good enough’ for most users although higher resolution and affordable larger sizes would be very welcome.
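To put the resolution figures above in perspective, the following sketch compares information density in dots per square inch rather than dots per linear inch. The function name is illustrative. Using 300 dpi as a stand-in for print is an assumption, borrowed from the low-end printer resolution quoted elsewhere in this paper; typeset print is generally finer still.

```python
def areal_gain(old_dpi, new_dpi):
    """Ratio of dots per square inch between two linear resolutions."""
    return (new_dpi / old_dpi) ** 2


if __name__ == "__main__":
    # Screen improvement over the decade discussed in the text.
    print(f"72 -> 96 dpi:  x{areal_gain(72, 96):.2f} dots per square inch")
    print(f"72 -> 100 dpi: x{areal_gain(72, 100):.2f} dots per square inch")
    # Remaining gap to a 300 dpi printed page (assumed print baseline).
    print(f"96 -> 300 dpi: x{areal_gain(96, 300):.2f} dots per square inch")
```

On these assumptions a decade of display development has not quite doubled areal density, while a 300 dpi page still carries nearly ten times as many dots per square inch as a 96 dpi screen.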

Printers used to be expensive, slow, low resolution and monochrome. Technology advances in the field of both ink-jet and laser printers have addressed all of the above concerns. Ink-jet printers are now available at costs that are small percentages (of the order of 10-20%) of the cost of an entry-level computer. Low-end ink-jet printers typically have running costs of less than US$0.05/page for monochrome output or less than US$0.20/page for colour output, print faster than a page/minute, have resolutions of at least 300 dots per inch (dpi) and provide reasonable colour quality. Higher-quality ink-jets provide faster printing speeds, resolutions of up to 1400 dpi and excellent colour output. Laser printers are approximately twice as expensive as ink-jet printers and are restricted to monochrome output (unless one wants to pay a lot of money) but offer much faster printing (4-6 pages per minute), support for PostScript, and better resolution (usually 600 dpi). The affordability of cheaper printers (and the spread of networking as discussed below) means that most professionals should be able to print out monochrome articles as desired, with an increasing proportion being able to print out colour as well.

Operating Systems

The development in operating systems has gone hand in hand with these advances in hardware (particularly CPU speed and secondary storage). Faster CPUs have enabled a greater percentage of the CPU to be allocated to providing a better user experience (and adding unnecessary bells and whistles). Graphical user interfaces are now standard and software companies are working on providing more user assistance (in the form of software assistants) as well as additional input choices like voice recognition. Larger hard disks make possible more bloated (and more functional) operating system and application code to the extent that four gigabytes is barely enough for most users. The Mac OS passed the ‘good enough’ point in the late 1980s and Windows passed it with the release of Windows 95. Microsoft’s dominance of the personal computer OS market means that the operating system is also now a consumer commodity. As already argued, operating systems are examples of non-substitutable infrastructure goods. Switching from one to another requires the user to relearn the way they work with a computer, purchase completely new software and perhaps even lose data in the migration. Few users are prepared to make such a move unless absolutely necessary. In the current Department of Justice trial in the United States (probably resolved by the time this paper is delivered) Microsoft have been attempting to argue that operating systems are substitutable and that their current monopoly position could be overturned very quickly (they have suggested as possible candidates the Be OS, Linux and OS X from Apple). Their arguments have not convinced the judge or those reporting on the case.

Networks

The network space is one where the last two decades have seen a transition from one non-substitutable technology to another. This is, of course, the transition of network protocols from ISO/OSI to TCP/IP. The transition occurred for at least three reasons: TCP/IP is a superior technology in many respects, it is more open, and the ascendancy of the Internet (based on TCP/IP) drove the network standards demanded by consumers. Most such consumers (users of personal computers) only developed a need for wide area network connectivity after the transition had taken place and have therefore inherited a mature networking technology. TCP/IP is now built into all major personal computer OSs (Windows 9x, MacOS and Linux) as the default WAN protocol. As with the OS, users expect TCP/IP access to be there and to work. Local area networks have standardised on Ethernet as the protocol and 10BASE-T for the cabling, with fibre optics usually used for organisation or campus backbones.

Applications

The two critical application technologies for electronic publishing at the moment are the Web browser (and HTML format documents) and Adobe’s Acrobat technology (and Portable Document Format (PDF) documents). The overwhelming majority of e-journals use one or both of these formats for delivery of content.

The browser (as evidenced in Netscape Communicator and Microsoft's Internet Explorer) crossed the chasm on both the graphs shown in figure 3 at about release 3.0. As far as product performance goes, version 3.x browser technology is clearly ‘good enough’. In fact, many large organisations still have version 3.x as part of their standard operating environment. It satisfies the basic needs for browsing standard HTML and supports additional features like tables and JavaScript. As far as the number of users is concerned, it was at about version 3.x that new users of Web browsers (and by extension the Web) moved from being early adopters to early majority pragmatists. The further transition to late majority conservatives is occurring now, with URLs routinely placed as part of advertising campaigns, listed on TV show credits and provided as part of radio broadcasts. Government departments are rapidly becoming Web-enabled and the Web as a whole is becoming part of popular culture. It is no longer the specialist niche phenomenon we discussed at the first of these conferences.

Adobe’s PDF technology is now mature and well-accepted. Version 4.0 of Acrobat has just been released (in January, 1999), providing extra features, better workflow support and better Web integration [Adobe, 1999]. After Adobe’s disastrous mistake in trying to charge for the reader software (back in version 1.0), the Acrobat reader is now widely available and routinely installed on new computers. The performance of current entry-level computers is sufficient to image PDF documents quickly. As with the Web, it was about at version 3.0 that Acrobat technology could be regarded as good enough to support large-scale use.

Implications for e-journals

What then are the implications of all this for the future of e-journals? How can an understanding of product life-cycles, innovation strategies and adoption curves help us predict developments in a notoriously fluid area? The research presented in this paper does not provide a guaranteed answer, but it does at least help to clear some of the fog from the crystal ball. The implications for e-journals can be summarised under four distinct areas: e-journals as products, availability of technologies, user transitions and publisher transitions.

E-journals as products

It is important to recognise at the start that journals can be thought of as products, sharing many of the characteristics (but not all) of other product types, or what classical economics calls goods.

Journals appear on the surface to be substitutable goods: there are typically multiple alternative journals in any given discipline, each with differing status, coverage and price. However, in practice, they behave as examples of non-substitutable goods, where the user (either an individual or institutional subscriber) does not easily substitute one for another. Journals differ from most non-substitutable goods in that they are not infrastructure goods in the sense discussed already. They do not provide an infrastructure on which other things can be built in the sense of a networking standard. However, they do share some of the characteristics of infrastructure goods in the sense that particular journals are embedded in the scholarly disciplines they serve. Such journals have the most prestige, they are the best-known and recognised brand for that discipline and they enable a wide range of other scholarly interactions. These might include legitimation by one's peers, timely and effective communication of results to a forum that is read by a majority of readers (or at least, the readers that matter), and archiving in a forum that is not likely to disappear. This link between the embedding of a journal and the activities in a particular discipline is one explanation for why journals are infrequently substituted. Other factors are probably also important. Individual users may be used to the ‘look and feel’ of a particular journal, or it may have more prestige in their field. For a library, maintaining continuity of holdings may be important, or it may be more difficult to source a particular title.

This suggests that getting readers to change from an existing print journal to a different e-journal may be difficult. Existing journal brands that make themselves available in electronic form (in addition to print or ultimately as a replacement for print) are likely to remain more attractive because of the factors already discussed. Of course, if the price of one good becomes much too high, then consumers may well feel under pressure to switch. As an example, the American Chemical Society (ACS) is planning to produce a low-cost e-journal to compete with Elsevier’s US$10,000/year Tetrahedron Letters [Kiernan, 1998]. Because of the prestige and backing of the ACS, this initiative has more chance of succeeding than it otherwise would.

Technology availability

One of the problems designers of e-journals have faced in the past has been the need for readers to have access to particular technology in order to read their publications. As these technologies (already discussed) move from high technology to consumer commodity, they will become much more widespread and readily available. Publishers can now assume that their readers have these necessary technologies. The need for specialised e-journal clients [Keyhani, 1993] is therefore a thing of the past. In other words, access to technology is no longer a constraint on e-journal design (with the caveat that one can never have enough RAM or network bandwidth).

User transitions

As already discussed, the problem with introducing any new technology is how to get past the early adopters and ‘cross the chasm’. Norman's model predicts that once a technology is mature, customers want solutions and convenience [Norman, 1998]. The user experience is therefore what dominates. Early adopters are very forgiving of user interface and performance problems, but once the technology is ‘good enough’ most users have little interest in further technology improvements. This means that new technologies like metadata and XML will have very little impact on users except insofar as they improve the user experience. As far as navigation and display of journal content are concerned, HTML and PDF are arguably good enough now. To ensure mass-market take-up of e-journals it is now necessary to focus on the user experience and on marketing.

One publisher that has done an outstanding job on this has been Stanford University’s Highwire Press <http://highwire.stanford.edu/>. They have spent a lot of time thinking about how to use the new capabilities of the e-journal to provide the best user experience and have consciously embedded connections to scholarship into their e-journal products. Their e-journals all provide:

- direct searching by author, title keywords or text words, both within journals and across journals

- display in PDF (best for printing) or HTML (best for navigation)

- automatic creation of hyperlinks to MEDLINE citations provided by the National Library of Medicine's PubMed service

- links from GenBank accession numbers to full GenBank records

- bidirectional links between citing articles and cited references (where available) [Newman, 1997]

- "‘toll-free’ links between the references from one journal article to the full text of the cited article" [Reich, 1997a].

Highwire have surveyed their stakeholders to identify popular features and barriers to uptake [Gotsch and Reich, 1997]. They have also used the existing networks within related scholarly disciplines to market their journals and solicit feedback on desirable additional features.

It is important to note that users of electronic publishing innovations include both authors and readers (although these groups overlap to some degree). The motivating factors will be different for each group. Readers will be more concerned with things like usability of the final product and additional functionality in terms of searching for and working with articles. Authors will be more concerned with things like extra workload when creating articles, increased visibility for their ideas once published, and the ability to communicate more effectively to their peers through the use of hypermedia features that were not available in print.

Publisher transitions

The other group that needs to make a transition is the publishers, but in this case the transition is from product to process innovation. The majority of early e-journals came out of product innovation and came from new publishers who because of their size (and lack of historical baggage) found it easier to innovate. The challenge for e-journal publishers now is to move on to sustainable process innovation. This is a difficult task both for new and existing publishers.

New publishers need to move from the cottage-craft stage to the industrial revolution. Hand-crafting HTML for a single journal that comes out four times a year is very different to managing a stable of journals that come out monthly or weekly! Such publishers need to invest heavily in automation technology that will ensure as much consistency and reproducibility in production processes as possible (and that will automate as much of the routine work as possible). Highwire Press rewrote their systems three times in the first few years to make sure that they got this right [Reich, 1997b]. An indication of their success is that they were then able to add five dozen new titles in 1998.

Existing publishers face a different transition. They have highly-tuned production systems which reflect a considerable amount of process innovation, but for the wrong delivery mechanism. Their systems are tuned for print delivery and must now adapt to parallel delivery (print and electronic), and most likely to electronic-only delivery at some point in the (near?) future. This requires a considerable re-engineering effort and may be very difficult for some. It is quite possible that coming from print may hamper their efforts to make optimal use of this new medium. Process innovation when the process environment is undergoing a fundamental change is no easy thing.

Role of innovation clusters

Hahn and Schoch [Hahn & Schoch, 1997] have suggested that examining clusters of innovations may be the best way of considering the diffusion of electronic publishing. They identify the following possible categorisation of such innovations:

- innovations in publishing roles

- distribution and retrieval innovations

- innovations in document structure

- innovations in the validity of research

- post-publication innovations

- sale and pricing innovations

- storage innovations.

Each of these categories has a number of possible members. For instance, storage innovations might include publisher storage, designated archives, individual library initiatives and distributed cooperative archiving. Different e-journal projects will adopt varying combinations of the above innovations and will also make different choices within each category. Choices in one category (for instance hypertext documents) will govern possible choices in other categories (in this example leaning towards Web distribution).

Hahn and Schoch point out that the stakeholders in electronic publishing are interdependent (they identify readers, authors and publishers as the main groups, but they downplay the crucial role of libraries and omit scholarly societies altogether). This interdependence requires a critical mass of adopters from all groups in order for any transition to become self-sustaining. They suggest that differing scholarly communities will adopt innovations at differing rates. One striking example of this has been the success of the pre-prints archive within the high-energy physics community [Ginsparg, 1996].

Conclusion

The concept of e-journals as products, the distinction between product and process innovation, and the model of technology life-cycles outlined in this paper enable us to better understand where the e-journal has come from and where it is going. Understanding the implications of the shift from high technology product to consumer commodity will be critical to planning for future changes in the e-journal, and by extension to changes in scholarly communication itself. Change in itself is not bad, but it can be if the fear of change hampers our ability to take best advantage of what that change has to offer.

Acknowledgements

I wish to acknowledge the perceptive and useful comments made by the two reviewers. This paper has been significantly improved as a result of their input.

References

Adobe Corporation (1999). Acrobat 4.0 FAQ. Available online at <http://www.adobe.com/prodindex/acrobat/PDFS/acr4faq.pdf>.

Agre, P. E. (1995). Designing genres for new media: Social, economic, and political contexts. The Network Observer, 2(11). Available online at <http://communication.ucsd.edu/pagre/tno/november-1995.html#designing>.

Christensen, C. M. (1997). The innovator's dilemma: When new technologies cause great firms to fail. Boston: Harvard Business School Press.

Ginsparg, P. (1996). Winners and Losers in the Global Research Village. Invited contribution for Conference held at UNESCO HQ, Paris, 19-23 Feb. 1996, during session ‘Scientist's View of Electronic Publishing and Issues Raised’ Wed 21 Feb. 1996. Available online at <http://xxx.lanl.gov/blurb/pg96unesco.html>.

Gotsch, C. H. and Reich, V. (1997). Electronic publishing of scientific journals: Effects on users, publishers and libraries. Available online at <http://www-sul.stanford.edu/staff/pubs/clir/Final.html>.

Hahn, K. L. and Schoch, N. A. (1997). Applying diffusion theory to electronic publishing: A conceptual framework for examining issues and outcomes. Proceedings of the ASIS Annual Meeting 1997, Vol. 34, pages 5-13. Available online at <http://www.asis.org/annual-97/hahnk.htm>.

Keyhani, A. (1993). The online journal of current clinical trials: an innovation in electronic journal publishing. Database, 16(1), February, 14-23.

Kiernan, V. (1998). University libraries join with chemical society to create a new, low-cost journal. Chronicle of Higher Education, page A20.

Moore, G. A. (1991). Crossing the chasm: Marketing and selling high-tech goods to mainstream customers. New York: HarperBusiness.

MSNBC (1999). Free PC offer gets plenty of takers. MSNBC, February 8, 1999. Available online at <http://www.msnbc.com/local/wnbc/296752.asp>.

Newman, M. (1997). The Highwire Press at Stanford University: A review of current features. Issues in Science and Technology Librarianship, Summer. Available online at <http://www.library.ucsb.edu/istl/97-summer/journals.html>.

Norman, D. (1998). The invisible computer: why good products can fail, the personal computer is so complex, and information appliances are the solution. Cambridge, MA: MIT Press.

Reich, V. (1997a). Personal communication. Via email.

Reich, V. (1997b). Personal communication. Case-study interview.

Rogers, E. M. (1995). Diffusion of innovations. Fourth edition. New York: The Free Press.

Sullivan, B. (1999). Free Net access gains steam. MSNBC, Feb. 8, 1999. Available online at <http://www.msnbc.com/news/239439.asp>.

Utterback, J. M. (1994). Mastering the dynamics of innovation. Boston: Harvard Business School Press.

Copyright Andrew Treloar, 1999. Last modified May 11, 1999.